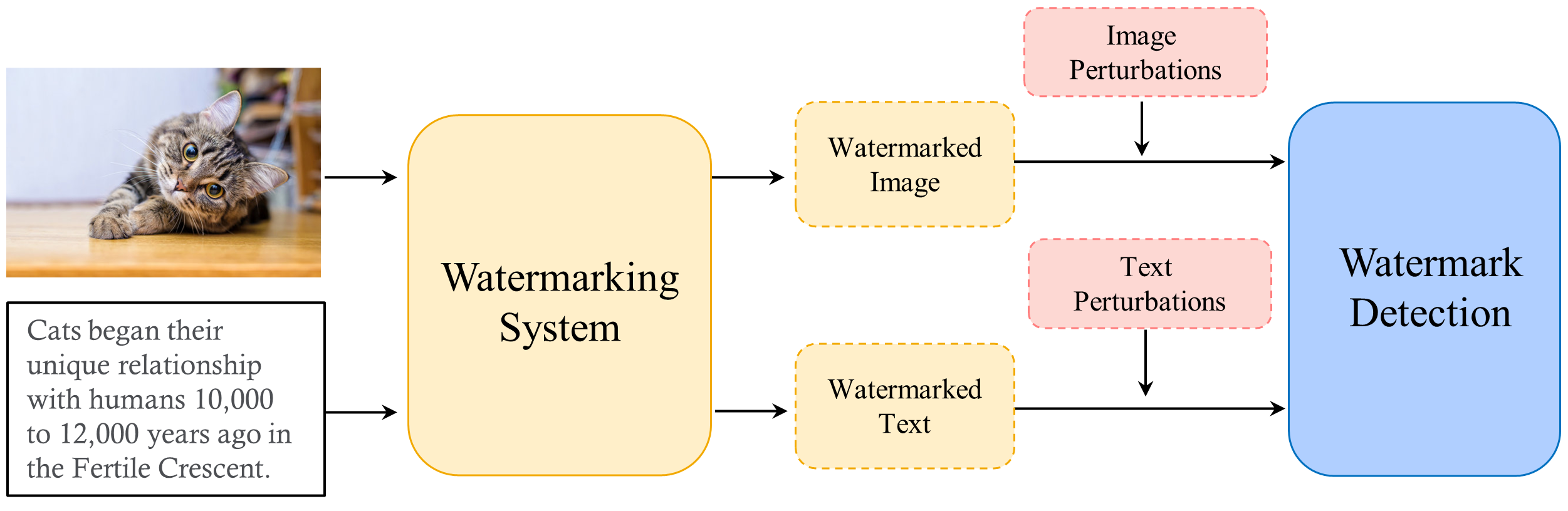

The overall pipeline of our watermarking robustness study. We add watermarks to the generated content and evaluate their robustness under image corruptions and text perturbations.

Abstract

With the development of large models, watermarks are increasingly employed to assert copyright, verify authenticity, or monitor content distribution. As applications become more multimodal, watermarking techniques become even more critical. The effectiveness and reliability of these watermarks depend largely on their robustness to disturbances. However, their robustness in real-world scenarios, particularly under perturbations and corruptions, is not well understood. To highlight the significance of robustness in watermarking techniques, our study evaluates the robustness of watermarks on the outputs of image and text generation models against common real-world image corruptions and text perturbations. Our results could pave the way for the development of more robust watermarking techniques in the future.

Benchmark study

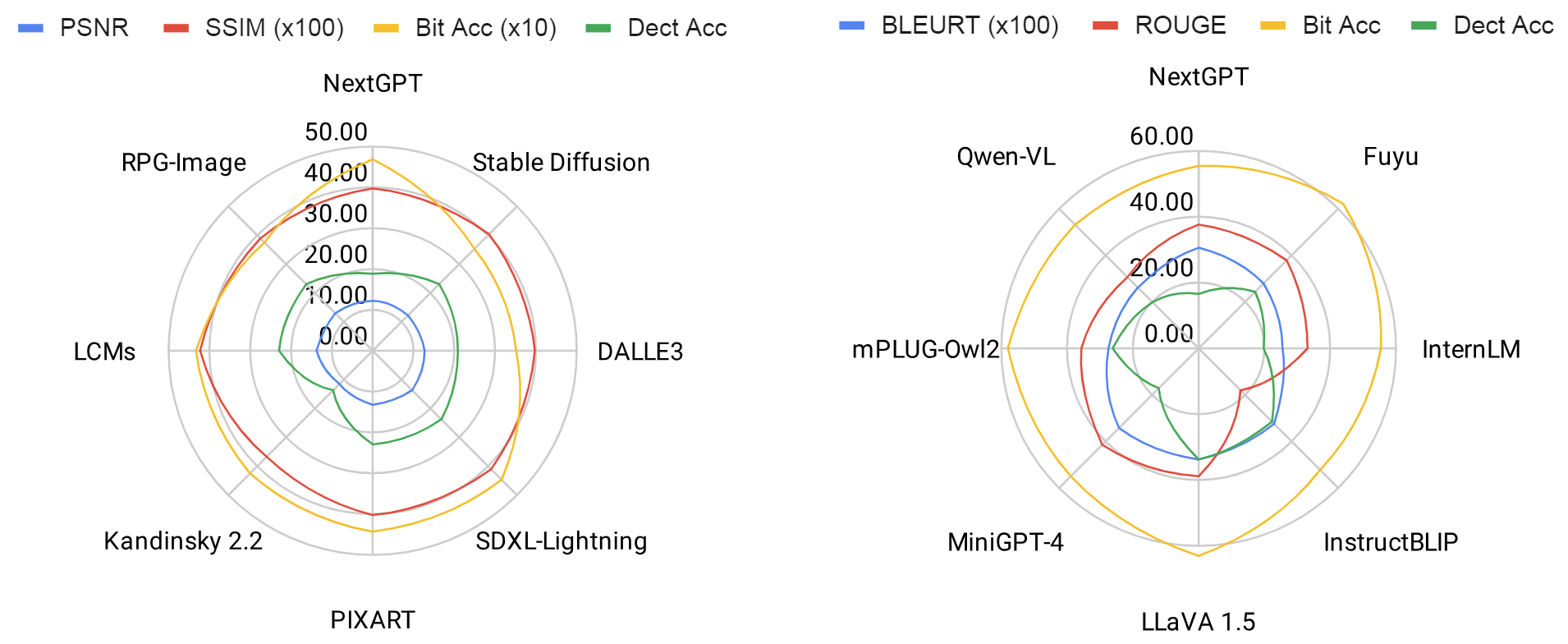

Performance comparison of different models under [left] image perturbations and [right] text perturbations.

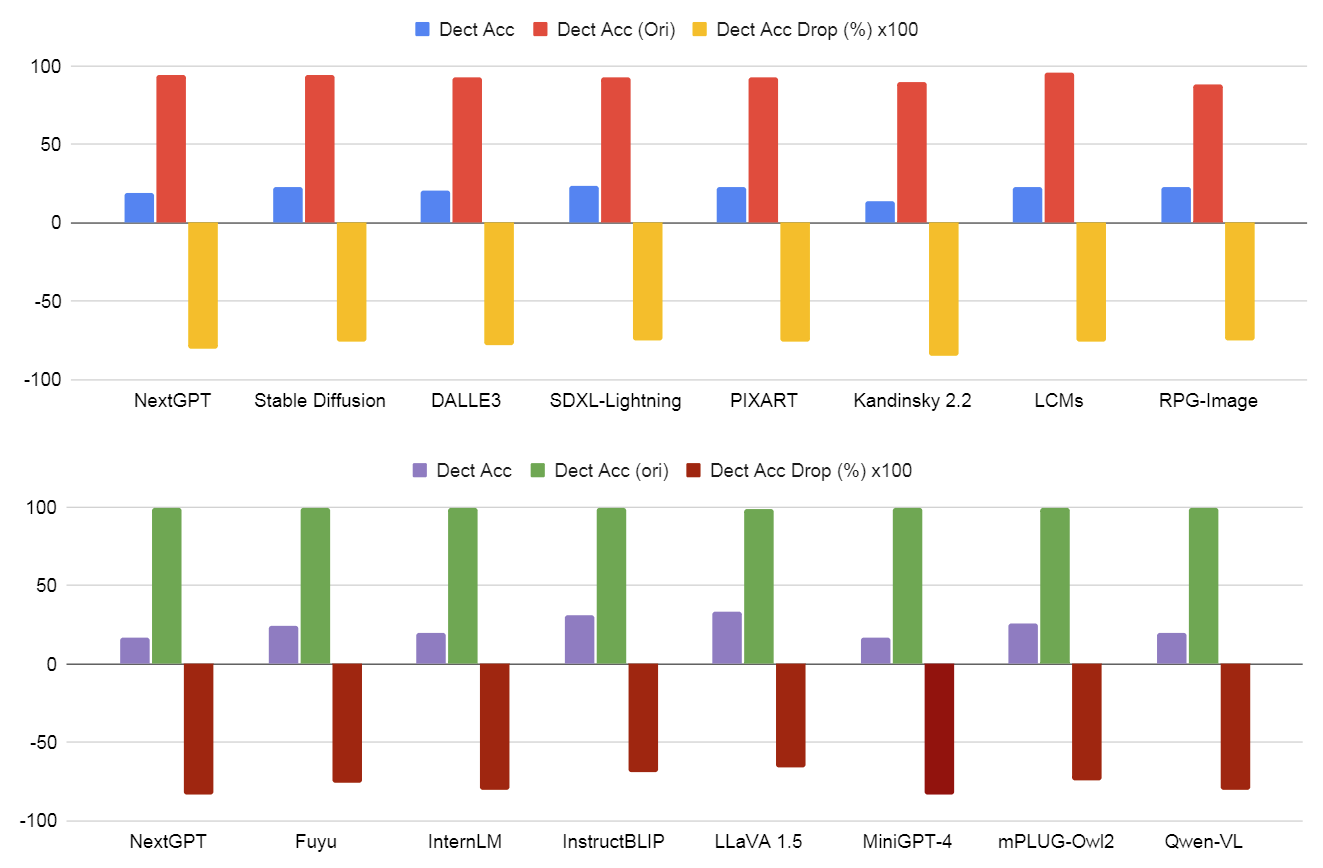

Comparisons of different [Top] image corruption and [Bottom] text perturbation methods. All results are averaged over the severity levels.

Model comparisons under [Top] image corruptions and [Bottom] text perturbations. All results are averaged over all image/text perturbations.

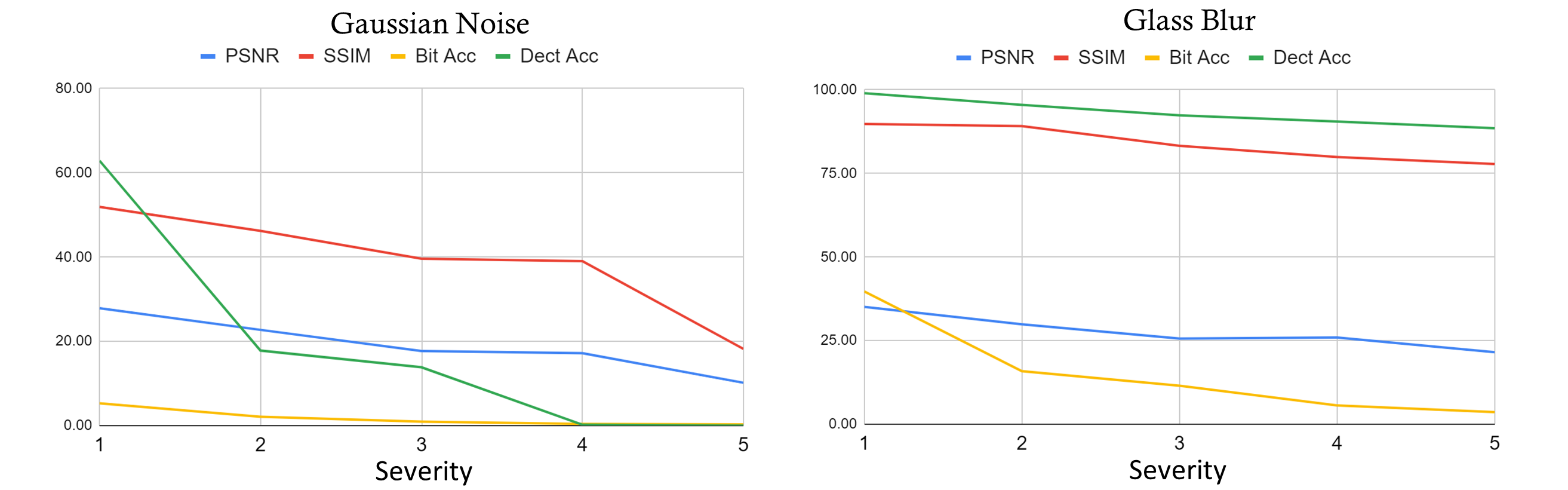

Performance changes with different severity levels under image perturbations.

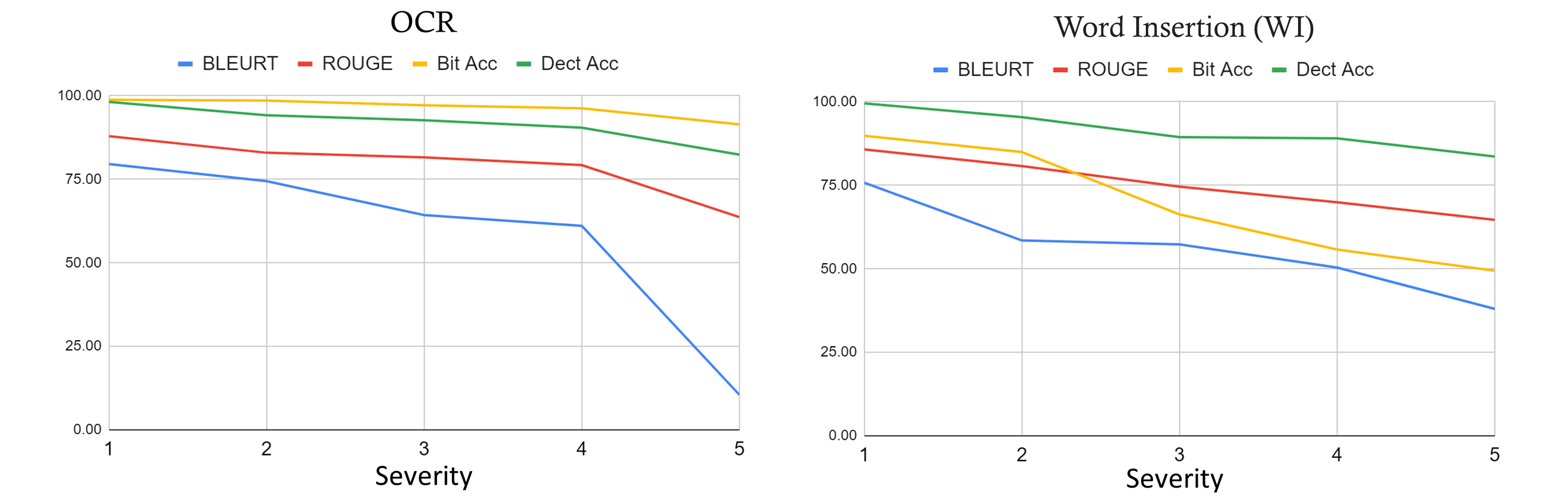

Performance changes with different severity levels under text perturbations.